Changelog

v1.4 - Skills: Let AI Assistants Control Your Devices

v1.4 introduces Midscene Skills — a set of installable skill packs for AI assistants like Claude Code and OpenClaw, enabling them to directly control browsers, desktops, Android, and iOS devices. This release also includes a standalone desktop MCP service, independent CLI entry points for each platform package, enhanced AI planning, and more.

Midscene Skills — Device Control Skills for AI Assistants

Midscene Skills is a set of skill packs that can be installed into AI assistants like Claude Code and OpenClaw. Once installed, AI assistants can control browsers, desktops, Android, and iOS devices using natural language.

Each platform package (@midscene/android, @midscene/ios, @midscene/web, etc.) now exposes an independent CLI entry point, which is the foundation that Skills is built upon.

Supported Platforms:

- Browser (Puppeteer headless mode)

- Chrome Bridge (user's own desktop Chrome)

- Desktop (macOS, Windows, Linux)

- Android (via ADB)

- iOS (via WebDriverAgent)

See: Midscene Skills

Standalone Desktop Automation MCP Package

The new @midscene/computer-mcp package provides PC desktop automation as a standalone MCP service. Developers can use desktop automation capabilities directly in MCP-compatible tools like Cursor and Trae without additional integration.

See docs: PC Desktop Automation

Chrome Extension MCP Background Connection

The Chrome extension now supports background Bridge mode MCP connection, exposing the desktop browser as an MCP tool to AI assistants, further expanding the MCP ecosystem.

AI Planning Enhancements

- New deepLocate option for aiAct: Enable deep locating during action execution, improving element locating accuracy in complex interfaces

- Swipe vs DragAndDrop semantic distinction: The model can now more precisely distinguish between swipe and drag-and-drop operations, reducing gesture planning errors

- LLM planning page navigation restrictions: Prevents the model from generating unreasonable page navigation during planning, improving task execution stability

- AppleScript keyboard input on macOS: Improved keyboard input stability and compatibility in desktop automation

- Cursor move action: New cursor move action support

YAML Scripts & File Upload Enhancements

- YAML aiTap supports fileChooserAccept: Handle file upload dialogs directly in YAML scripts

- Directory upload support: Web supports webkitdirectory type folder selection upload

Chrome Extension Bridge Mode Caching

Bridge mode now supports caching, reusing existing AI planning results to reduce repeated calls and improve debugging efficiency.

Android Improvements

- Optimized text input logic for improved input stability

iOS Improvements

- Playground live screen stream: iOS Playground now features live screen preview for real-time device monitoring during debugging.

v1.3 - PC Desktop Automation Support

v1.3 introduces brand new PC desktop automation capabilities, significantly improves Android screenshot performance, and brings multiple enhancements to the report system and to stability.

New PC Desktop Automation Support

Midscene now supports PC desktop automation on Windows, macOS, and Linux, driving native keyboard and mouse controls. Whether it's Electron, Qt, WPF, or native desktop applications, they can all be automated through the visual model approach.

Core Capabilities:

- Mouse Operations: click, double-click, right-click, mouse move, drag and drop

- Keyboard Input: text input, key combinations (Cmd/Ctrl/Alt/Shift)

- Screenshots: capture screenshots from any monitor

- Multi-monitor Support: operate across multiple displays simultaneously

Usage Methods:

- Zero-code trial via Computer Playground

- JavaScript SDK for scripting

- YAML automation scripts and CLI tools

- HTML report playback for all operation paths

See documentation: PC Desktop Automation

Major Android Screenshot Performance Improvement

With Scrcpy screenshot mode enabled, screenshot time drops from 500–2000ms to 100–200ms, significantly improving Android automation response speed. This is particularly useful for remote device debugging and high frame rate scenarios.

See documentation: Scrcpy Screenshot Mode

Deep Thinking Mode Enhancement

The aiAct deep thinking (deepThink) mode now not only helps with element location but also optimizes overall task planning, achieving better execution results in complex forms and multi-step workflows.

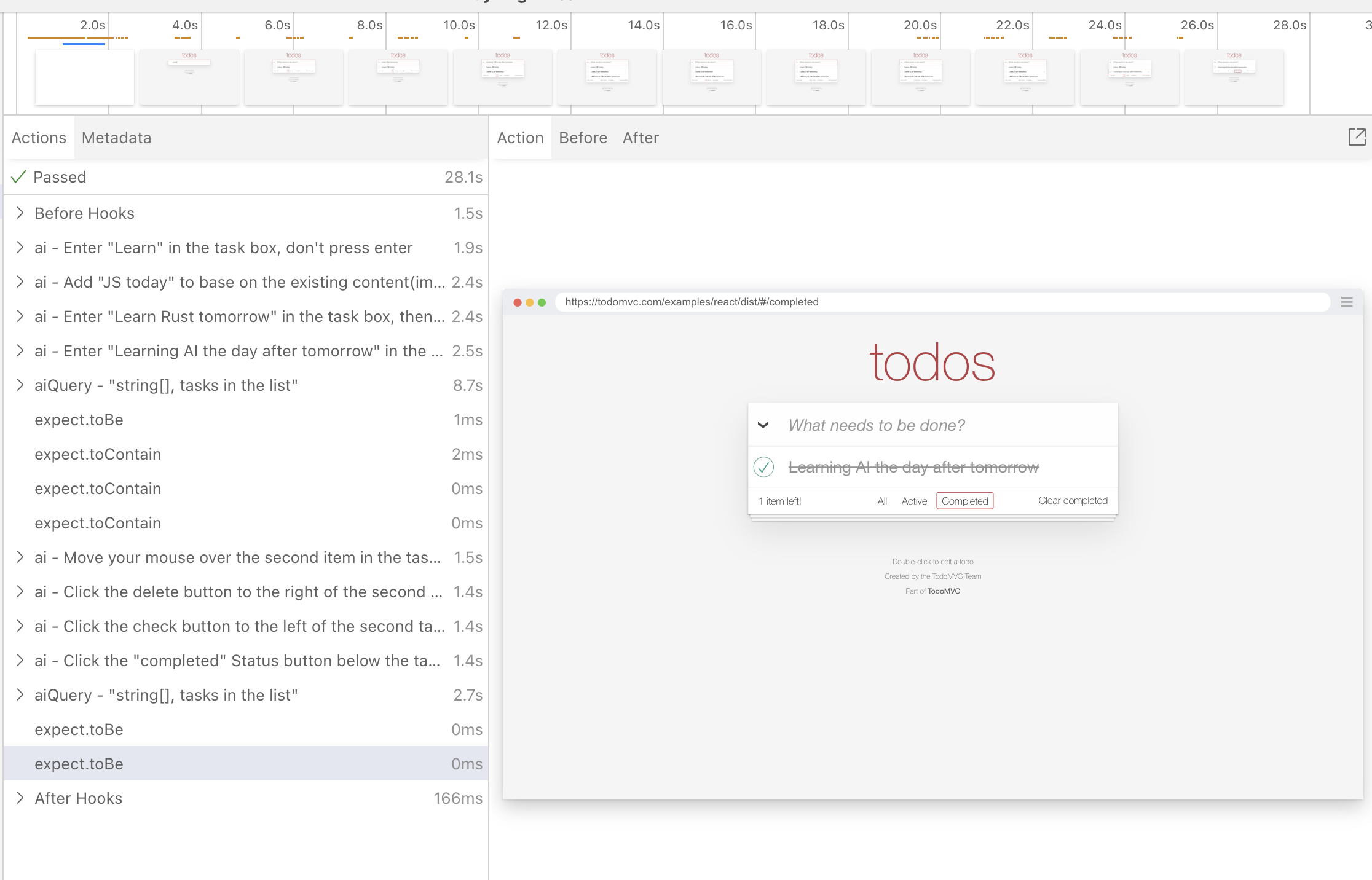

Report Experience Optimization

- Timeline Collapse: New collapse toggle button for easier viewing of long task flows

- Time Unit Changed to Seconds: More readable

- Step Sync Highlighting: Sidebar step highlighting syncs in real-time with player playback

- Reduced Memory Usage: Optimized report generation mechanism to effectively reduce runtime memory usage

Mobile Platform Improvements

Android

- More stable special character and Unicode input

- More relaxed app package name matching for Launch action (ignores case and spaces)

- Auto-retry when screenshot anomalies occur on certain devices

iOS

- More relaxed Bundle ID matching (ignores case and spaces)
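The relaxed matching described above for Android package names and iOS Bundle IDs (ignoring case and spaces) can be pictured with a small sketch. The helper names normalizeAppId and matchesAppId are hypothetical and only illustrate the rule; they are not Midscene's actual implementation.

```typescript
// Hypothetical helpers: illustrate "ignores case and spaces" matching only.
function normalizeAppId(id: string): string {
  // Strip every whitespace character, then lowercase the result.
  return id.replace(/\s+/g, "").toLowerCase();
}

function matchesAppId(requested: string, installed: string): boolean {
  return normalizeAppId(requested) === normalizeAppId(installed);
}

// A request with stray spaces and mixed case still matches the installed app.
const sameApp = matchesAppId(" Com.Example.MyApp ", "com.example.myapp");
// A genuinely different identifier does not match.
const otherApp = matchesAppId("com.example.other", "com.example.myapp");
```

Normalizing both sides before comparing keeps the rule symmetric, so it does not matter whether the stray spaces come from the prompt or from the device listing.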

Web Automation Improvements

- Fixed issue where Puppeteer could hang when taking screenshots of inactive tabs

- Fixed inaccurate window size in headed mode

- shareBrowserContext mode now supports preserving localStorage and sessionStorage

- Playwright multi-project configuration automatically distinguishes test cases by browser in reports

- Fixed typeOnly mode not working in YAML script input actions

Other Improvements

- Image processing performance improved

- SVG icon cache issue fixed

- Playground now displays specific reasons for model configuration errors

v1.2 - Zhipu AI Open-Source Model Support and File Upload Support

v1.2 introduces support for Zhipu AI open-source models, adds file upload functionality, and fixes several issues affecting user experience, making automated testing more reliable.

New Zhipu AI Open-Source Model Support

Zhipu GLM-V Vision Model

- Zhipu GLM-V series models are open-source vision models launched by Zhipu AI, available in multiple parameter versions, supporting both cloud deployment and local deployment.

- See: GLM-V Model Configuration

Zhipu AutoGLM Mobile Automation Model

- Zhipu AutoGLM is an open-source mobile automation model launched by Zhipu AI. It can understand mobile screen content based on natural language instructions, and combined with intelligent planning capabilities, generate operation processes to complete user needs.

- See: AutoGLM Model Configuration

File upload feature

File upload is a common requirement in Web automation scenarios. v1.2 adds file upload capability for the web, supporting natural language operations for file input boxes, making form automation more complete.

See: aiTap file upload

Cache mechanism optimization

Fixed the issue where cache wasn't updated after DOM changes. When page DOM changes cause cache validation to fail, the system now automatically updates the cache, avoiding operation failures due to stale cache and improving automation script stability.

Report and Playground improvements

Deep thinking tag optimization

- Fixed the issue where deepThink tags weren't displayed correctly in reports when using the .aiAct() method with deep thinking. Now you can clearly see which operations used deep thinking capability in reports

- Improved the style of summary rows in reports for better readability

Playground stability improvements

- Fixed the issue where Playground didn't properly create agent instances in getActionSpace when using agentFactory mode, ensuring normal operation across various usage modes

- Optimized Playground output display to prevent overly long reportHTML content from affecting the interface

Model configuration updates

Updated configuration parameters for Qwen model's deep thinking functionality to ensure compatibility with the latest model version.

v1.1 - aiAct deep thinking and extensible MCP SDK

v1.1 optimizes model planning capabilities and MCP extensibility, making automation more stable in complex scenarios while providing more flexible solutions for enterprise MCP service deployments.

aiAct can enable deep thinking (deepThink)

When deep thinking is enabled in aiAct, the model will interpret intent more thoroughly and optimize its planning results. This is suited for complex forms, multi-step flows, and similar scenarios. It improves accuracy but increases planning latency.

Currently supported: Qwen3-vl on Alibaba Cloud and Doubao-vision on Volcano Engine. See Model strategy for details.

Example usage:
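The original snippet is not reproduced here. As a rough sketch, assuming aiAct accepts an options object with a deepThink flag (the option name comes from the text above; the exact signature is an assumption), a call could look like the following. ActLikeAgent and the recording stub are hypothetical stand-ins so the snippet runs without a browser or a model.

```typescript
// Hypothetical minimal agent shape; a real Midscene Agent exposes far more.
interface ActOptions {
  deepThink?: boolean;
}

interface ActLikeAgent {
  aiAct(prompt: string, options?: ActOptions): Promise<void>;
}

// Stub that records calls instead of driving a real page.
const recordedCalls: Array<{ prompt: string; options: ActOptions | undefined }> = [];
const stubAgent: ActLikeAgent = {
  async aiAct(prompt: string, options?: ActOptions): Promise<void> {
    recordedCalls.push({ prompt, options });
  },
};

async function fillComplexForm(agent: ActLikeAgent): Promise<void> {
  // deepThink trades extra planning latency for accuracy on multi-step tasks.
  await agent.aiAct("fill in the registration form and submit it", {
    deepThink: true,
  });
}

const pending = fillComplexForm(stubAgent);
```

With a real agent, the same call shape would be passed to the agent instance for the target platform instead of the stub.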

MCP extension and SDK exposure

Developers can use the MCP SDK exposed by Midscene to flexibly deploy a public MCP service. This capability applies to Agent instances on any platform.

Typical application scenarios:

- Run MCP in enterprise intranet to control private device pools

- Package Midscene capabilities as internal microservices for multiple teams

- Extend custom automation toolchains

See documentation: MCP Services

Chrome extension improvements

- Fixed potential event loss during recording, improving recording stability

- Optimized coordinate passing in describeElement for better element description accuracy

CLI and configuration enhancements

- File parameter support: Fixed CLI issue where the --files parameter wasn't properly handled when --config was specified; now they can be flexibly combined

- Dynamic configuration: Fixed Playground not reading the MIDSCENE_REPLANNING_CYCLE_LIMIT environment variable properly

iOS Agent compatibility improvements

- Optimized getWindowSize method to automatically fall back to legacy endpoint when newer API is unavailable, improving compatibility with WebDriverAgent versions

Report and Playground improvements

- Fixed issue where report wasn't properly initialized before accessing screen properties

- Fixed abnormal behavior of stop function in Playground

- Improved error handling during video export to avoid crashes caused by frame cancel

Thanks to contributors: @FriedRiceNoodles

v1.0 - Midscene v1.0 is here!

Midscene v1.0 is here! Try it out today and see how it can help you automate your workflows.

See our new Showcases page for real-world examples

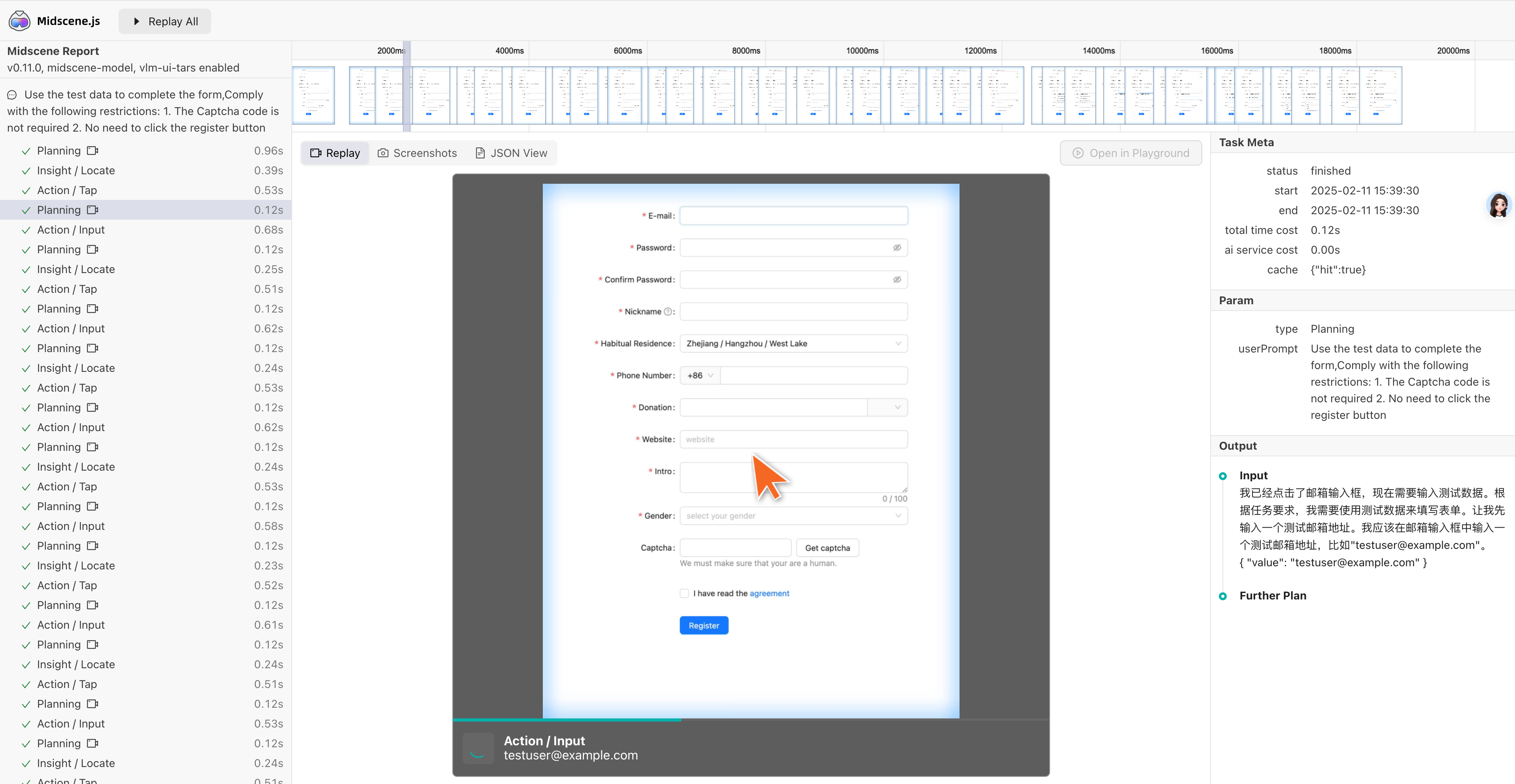

Register the GitHub form autonomously in a web browser and pass all field validations:

Plus these real-world showcases:

- iOS Automation - Meituan coffee order

- iOS Automation - Auto-like the first @midscene_ai tweet

- Android Automation - DCar: Xiaomi SU7 specs

- Android Automation - Booking a hotel for Christmas

- MCP Integration - Midscene MCP UI prepatch release

Some community developers have successfully built on Midscene's capability to integrate with any interface, extending it with a robotic arm plus vision and voice models for in-vehicle large-screen testing scenarios. See the video below.

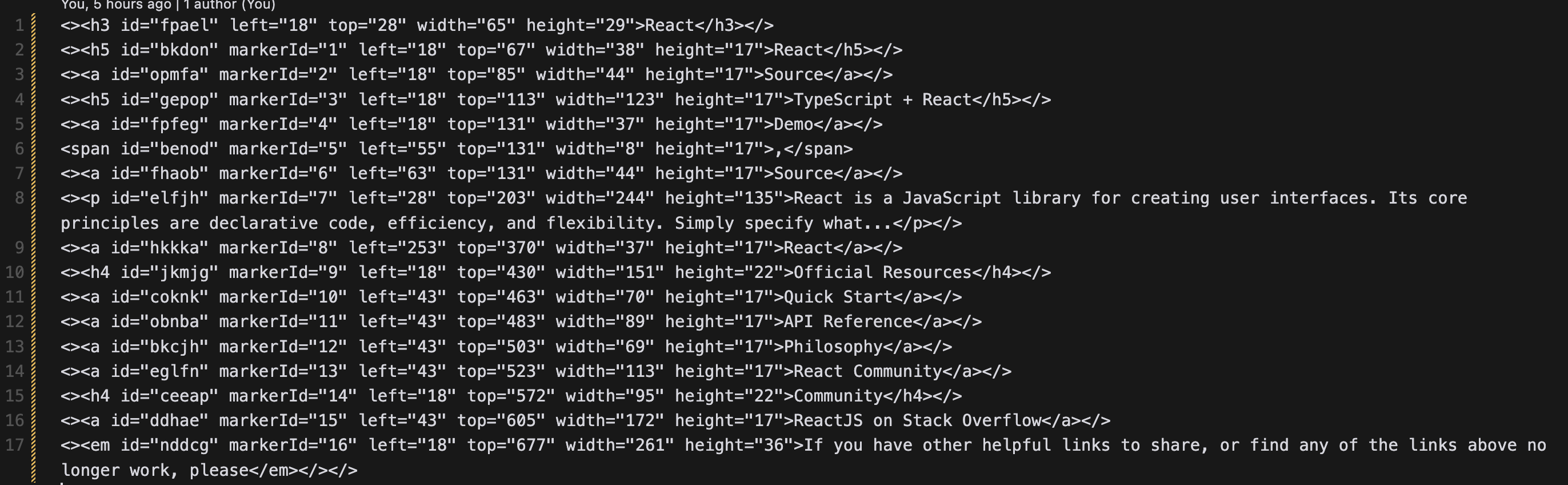

🚀 Pure vision path

Starting in v1.0, Midscene fully adopts a visual-understanding approach to deliver more stable UI automation. Visual models provide:

- Stable results: Leading vision models (Doubao Seed 1.6, Qwen3-VL, etc.) are reliable enough for most production needs

- UI workflow planning: Vision models generally excel at planning UI flows and can handle many complex task sequences

- Works everywhere: Automation no longer depends on the rendering stack. Android, iOS, desktop apps, or a browser <canvas>: if you can capture a screenshot, Midscene can interact with it

- Developer-friendly: Dropping selectors and DOM keeps prompts simpler; teammates unfamiliar with rendering tech can become productive quickly

- Far fewer tokens: Removing DOM extraction cuts token usage by about 80%, lowering cost and speeding up local runs

- Open-source options: Open-source vision models like Qwen3-VL 8B/30B perform well, enabling self-hosted deployments

See our updated Model strategy for details.

🚀 Better results by multiple model combinations

Beyond the default interaction intent, Midscene defines Planning and Insight intents, and developers can enable dedicated models for each. For example, you can use a GPT model for planning while using the default Qwen3-VL model for element localization.

Multi-model combinations let you scale up handling of complex requirements as needed.

🚀 Runtime architecture optimization

- Reuse portions of context to reduce device-info calls and improve runtime performance

- Optimize Action Space combinations for Web and mobile environments to provide models with a more useful toolset

🚀 Report improvements

- Parameter view: mark interaction parameter locations, merge screenshot context, and identify model planning results faster

- Style updates: dark mode report rendering for better readability

- Token usage display: summarize token consumption by model to analyze cost across scenarios

🚀 MCP architecture redesign

We redefined Midscene MCP services around vision-driven UI operations. Each Action in the iOS / Android / Web Action Space is exposed as an MCP tool, providing atomic operations so developers can focus on higher-level Agents without worrying about UI operation details, while keeping success rates high. See MCP architecture.

🚀 Mobile automation

iOS improvements

- Added compatibility across WebDriverAgent 5.x–7.x

- Added WebDriver Clear API support to solve dynamic input field issues

- Improved device compatibility

Android improvements

- Added screenshot polling fallback to improve remote device stability

- Added automatic screen-orientation adaptation (displayId screenshots)

- Added YAML script support for runAdbShell

Cross-platform

- Expose system operation helpers on the Agent instance, including Home, Back, RecentApp, and more

🚧 API changes

Method renames (backward compatible)

- Renamed aiAction() → aiAct() (old method kept with deprecation warning)

- Renamed logScreenshot() → recordToReport() (old method kept with deprecation warning)

Environment variable renames (backward compatible)

- Renamed OPENAI_API_KEY → MIDSCENE_MODEL_API_KEY (new variable preferred, old variable as fallback)

- Renamed OPENAI_BASE_URL → MIDSCENE_MODEL_BASE_URL (new variable preferred, old variable as fallback)
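The "new variable preferred, old variable as fallback" rule can be sketched as a small resolver. The variable names come from the list above; resolveModelConfig and its return shape are hypothetical and are not Midscene's actual code.

```typescript
// Hypothetical resolver: prefers the MIDSCENE_MODEL_* names, falls back to OPENAI_*.
type EnvVars = Record<string, string | undefined>;

interface ModelConfig {
  apiKey: string | undefined;
  baseUrl: string | undefined;
}

function resolveModelConfig(env: EnvVars): ModelConfig {
  return {
    // `??` only falls back when the new variable is undefined.
    apiKey: env["MIDSCENE_MODEL_API_KEY"] ?? env["OPENAI_API_KEY"],
    baseUrl: env["MIDSCENE_MODEL_BASE_URL"] ?? env["OPENAI_BASE_URL"],
  };
}

// Only the legacy variable is set: it is still honored.
const legacyOnly = resolveModelConfig({ OPENAI_API_KEY: "old-key" });

// Both are set: the new variable wins.
const bothSet = resolveModelConfig({
  OPENAI_API_KEY: "old-key",
  MIDSCENE_MODEL_API_KEY: "new-key",
});
```

Taking the environment as a parameter rather than reading a global keeps the sketch easy to test; in a Node.js script the argument would typically be process.env.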

⬆️ Upgrade to the latest version

Upgrade only the packages you use. For example:

npm install @midscene/web@latest

npm install @midscene/android@latest

npm install @midscene/ios@latest

If you use the CLI installed globally:

npm i -g @midscene/cli

v0.30 - Cache management upgrade and mobile experience optimization

More flexible cache strategy

v0.30 improves the cache system, allowing you to control cache behavior based on actual needs:

- Multiple cache modes available: Supports read-only, write-only, and read-write strategies. For example, use read-only mode in CI environments to reuse cache, and use write-only mode in local development to update cache

- Automatic cleanup of unused cache: Agent can automatically clean up unused cache records when destroyed, preventing cache files from accumulating

- Simplified unified configuration: Cache configuration parameters for CLI and Agent are now unified, no need to remember different configurations
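To make the three cache modes concrete, here is a minimal sketch of how a strategy could gate reads and writes. The strategy names mirror the modes listed above, while the PlanCache class, its methods, and the cached values are hypothetical illustrations rather than Midscene's cache implementation.

```typescript
// Hypothetical cache wrapper; only the three strategy names come from the changelog.
type CacheStrategy = "read-only" | "write-only" | "read-write";

class PlanCache {
  private readonly strategy: CacheStrategy;
  private readonly store: Map<string, string>;

  constructor(strategy: CacheStrategy, seed?: Map<string, string>) {
    this.strategy = strategy;
    this.store = seed ? new Map(seed) : new Map<string, string>();
  }

  // Reads are served only when the strategy allows reading.
  get(prompt: string): string | undefined {
    if (this.strategy === "write-only") return undefined;
    return this.store.get(prompt);
  }

  // Writes are stored only when the strategy allows writing.
  set(prompt: string, plan: string): boolean {
    if (this.strategy === "read-only") return false;
    this.store.set(prompt, plan);
    return true;
  }
}

const seeded = new Map<string, string>([["click login", "tap(120, 48)"]]);

// CI: reuse existing cache, never modify it.
const ciCache = new PlanCache("read-only", seeded);
const ciHit = ciCache.get("click login");
const ciWrote = ciCache.set("open menu", "tap(8, 8)");

// Local development: always miss on read so fresh plans get written.
const devCache = new PlanCache("write-only", seeded);
const devHit = devCache.get("click login");
const devWrote = devCache.set("click login", "tap(121, 50)");
```

In this sketch a write-only cache always reports a miss, which forces a fresh plan that then overwrites the stored entry; that reading of "write-only" is an assumption.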

Report management convenience

- Support for merging multiple reports: In addition to playwright scenarios, all scenarios now support merging multiple automation execution reports into a single file for centralized viewing and sharing of test results

Mobile automation optimization

iOS platform improvements

- Real device support improvement: Removed simctl check restriction, making iOS real device automation smoother

- Auto-adapt device display: Implemented automatic device pixel ratio detection, ensuring accurate element positioning on different iOS devices

Android platform enhancements

- Flexible screenshot optimization: Added screenshotResizeRatio option, allowing you to customize screenshot size while ensuring visual recognition accuracy, reducing network transmission and storage overhead

- Screen info cache control: Use alwaysRefreshScreenInfo option to control whether to fetch screen information each time, allowing cache reuse in stable environments to improve performance

- Direct ADB command execution: AndroidAgent added runAdbCommand method for convenient execution of custom device control commands

Cross-platform consistency

- ClearInput support on all platforms: Solves the problem of AI being unable to accurately plan clear input operations across platforms

Feature enhancements

- Failure classification: CLI execution results can now distinguish between "skipped failures" and "actual failures", helping locate issue causes

- aiInput append mode: Added append option to append input while preserving existing content, suitable for editing scenarios

- Chrome extension improvements:

- Popup mode preference saved to localStorage, remembering your choice on next open

- Bridge mode supports auto-connect, reducing manual operations

- Support for GPT-4o and non-visual language models

Type safety improvements

- Zod schema validation: Introduced type checking for action parameters, detecting parameter errors during development to avoid runtime issues

- Number type support: Fixed aiInput support for number type values, making type handling more robust

Bug fixes

- Fixed potential issues caused by Playwright circular dependencies

- Fixed issue where aiWaitFor as the first statement could not generate reports

- Improved video recorder delay logic to ensure the last frame is captured

- Optimized report display logic to view both error information and element positioning information simultaneously

- Fixed issue where cacheable option in aiAction subtasks was not properly passed

Community

- Awesome Midscene section added midscene-java community project

v0.29 - iOS platform support added

iOS platform support added

The biggest highlight of v0.29 is the official introduction of iOS platform support! You can now connect to and automate iOS devices through WebDriver, extending Midscene's powerful AI automation capabilities to the Apple ecosystem. Details: Support iOS automation.

Qwen3-VL model adaptation

We've adapted Midscene to Qwen3-VL, the latest Qwen model, giving developers faster and more accurate visual understanding capabilities. See Model strategy.

AI core capability enhancement

- UI-TARS Model Performance Optimization: Optimized aiAct planning, improved dialogue history management, and provided better context awareness capabilities

- AI Assertion and Action Optimization: We updated the prompt for aiAssert and optimized the internal implementation of aiAct, making AI-driven assertions and action execution more precise and reliable

Reporting and debugging experience optimization

- URL Parameter Playback Control: To improve debugging experience, you can now directly control the default behavior of report playback through URL parameters

Documentation

- Updated documentation deployment cache strategy to ensure users can access the latest documentation content in time

v0.28 - Build your own GUI automation agent by integrating with your own interface (preview feature)

Support for integration with any interface (preview feature)

v0.28 introduces the capability to integrate with your own interface. Define an interface controller class that conforms to the AbstractInterface definition, and you can get a fully-featured Midscene Agent.

The typical use case for this feature is to build a GUI automation Agent for your own interface, such as IoT devices, in-house applications, car displays, etc.!

Combined with the universal Playground architecture and SDK enhancement features, developers can conveniently debug custom devices.

For more information, please refer to Integrate with Any Interface (Preview Feature)

Android platform optimization

- Planning Cache Support: Added planning cache functionality for Android platform, improving execution efficiency

- Input Strategy Enhancement: Optimized input clearing strategy based on IME settings, improving Android platform input experience

- Scroll Calculation Improvement: Optimized scroll endpoint calculation algorithm for Android platform

Gesture operation extension

- Double-Click Operation Support: Added support for double-click actions

- Long Press and Swipe Gestures: Added support for long press and swipe gestures

Core function enhancement

- Agent Configuration Isolation: Implemented model configuration isolation between different agents, avoiding configuration conflicts

- Execution Option Extension: Added useCache and replanningCycleLimit configuration options for Agent, providing more fine-grained control

- YAML Script Support: Support for running universal custom devices through YAML scripts, enhancing automation capabilities

Bug fixes

- Fixed Qwen model search region size issues

- Optimized deepThink parameter handling and rectangle size calculation

- Resolved issues related to Playwright double-click operations

- Improved TEXT action type processing logic

Documentation and community

- Added custom interface documentation to help developers better extend functionality

- Added Awesome Midscene section in README to showcase community projects

v0.27 - Core module refactoring, assertions and reports functionally enhanced

Core module refactoring

Based on the introduction of Rslib in v0.26 to improve development experience and reduce contribution thresholds, v0.27 takes it a step further by refactoring the core modules on a large scale. This makes it extremely easy to extend new devices and add new AI operations, and we sincerely welcome community developers to contribute!

Due to the wide scope of this refactoring, please feel free to report any issues you encounter after upgrading, and we will address them promptly.

API enhancement

aiAssert Functionally Enhanced

- New name field allows naming different assertion tasks, making it easier to identify and parse in JSON output results

- New domIncluded and screenshotIncluded options allow flexible control over whether to send DOM snapshots and page screenshots to AI

Chrome extension playground upgrade

- All Agent APIs can be directly debugged and run in the Playground! The three major categories of methods (interaction, extraction, and verification) are all covered, with visual operations and verification that boost your automation development efficiency! Come experience the truly versatile AI automation platform! 🚀

Report function optimization

- New Marking Layer Switch: The report player has added a switch to hide the marking layer, allowing users to view the original page view without obstruction when playing back.

Bug fixes

- Fixed the problem that aiWaitFor sometimes caused the report to not be generated

- Reduced memory consumption of Playwright plugin

v0.26 - Toolchain fully integrated Rslib, greatly improving development experience and reducing contribution threshold

Web integration optimization

- Support freezing page context (freezePageContext / unfreezePageContext), so that all subsequent operations reuse the same page snapshot, avoiding repeated page status acquisition

- Add all agent APIs to Playwright fixture, simplify test script writing, and solve the problem of not generating reports when using agentForPage

Android automation enhancement

- New keyboard hiding strategy (keyboardDismissStrategy), allowing you to specify the way to automatically hide the keyboard

Report function optimization

- Report content lazy parsing, solving the problem of report crash when the report is large

- Report player adds automatic zoom switch, making it easier to view the global perspective playback

- Support aiAssert / aiQuery tasks in report playback, to fully show the entire page change process

- Fix the problem that the sidebar status is not displayed as a failure icon when the assertion fails

- Fix the problem that the drop-down filter in the report cannot be switched

Build and engineering

- Build tool migration to Rslib library development tool, improving build efficiency and development experience

- Full repository source code jump, making it easier for developers to view source code

- MCP npm package size reduced from 56M to 30M, greatly improving loading speed

Bug fixes

- CLI automatically opens headed mode when keepWindow is true

- Fix the implementation problem of getGlobalConfig, solve the problem of abnormal environment variable initialization

- Ensure that the mime-type in base64 encoding is correct

- Fix the return value type of aiAssert task

v0.25 - Support using images as AI prompt input

Core function enhancement

- New worker runtime support: Midscene can now run in a worker environment

- Support using images as AI prompt input, see Prompting with images

- Image processing upgrade, using Photon & Sharp for efficient image cropping

Web integration optimization

- Get XPath by coordinates, improve cache reproducibility

- Cache file moves plan module to the front, improving readability

- Chrome Recorder supports exporting all events to markdown documents

- agent supports specifying HTML report name, see reportFileName

Android automation enhancement

- Long press gesture support

- Pull-to-refresh support

Bug fixes

- Use global config to handle environment variables, avoid issues caused by multiple packaging

- Manually construct error information when error object serialization fails

- Fix playwright report type dependency declaration order issue

- Fix MCP packaging issue

Documentation AI-friendly

- LLMs.txt is now available in both Chinese and English, making it easier for AI to understand

- Each document now has a copy-to-markdown button, making it easier to feed to AI

Other function enhancement

- Chrome Recorder supports aiScroll function

- Refactor aiAssert to be consistent with aiBoolean

v0.24 - MCP for Android automation

MCP for Android automation

- You can now use Midscene MCP to automate Android apps, just like you use it for web apps. Read more: MCP for Android Automation

Optimization

- For Mac platform Puppeteer, a double input clearing mechanism has been added to ensure that the input box is cleared before input

Development experience

- Simplify the way to build htmlElement.js to avoid report template build issues caused by circular dependencies

- Optimize development workflow, just use npm run dev to enter midscene project development

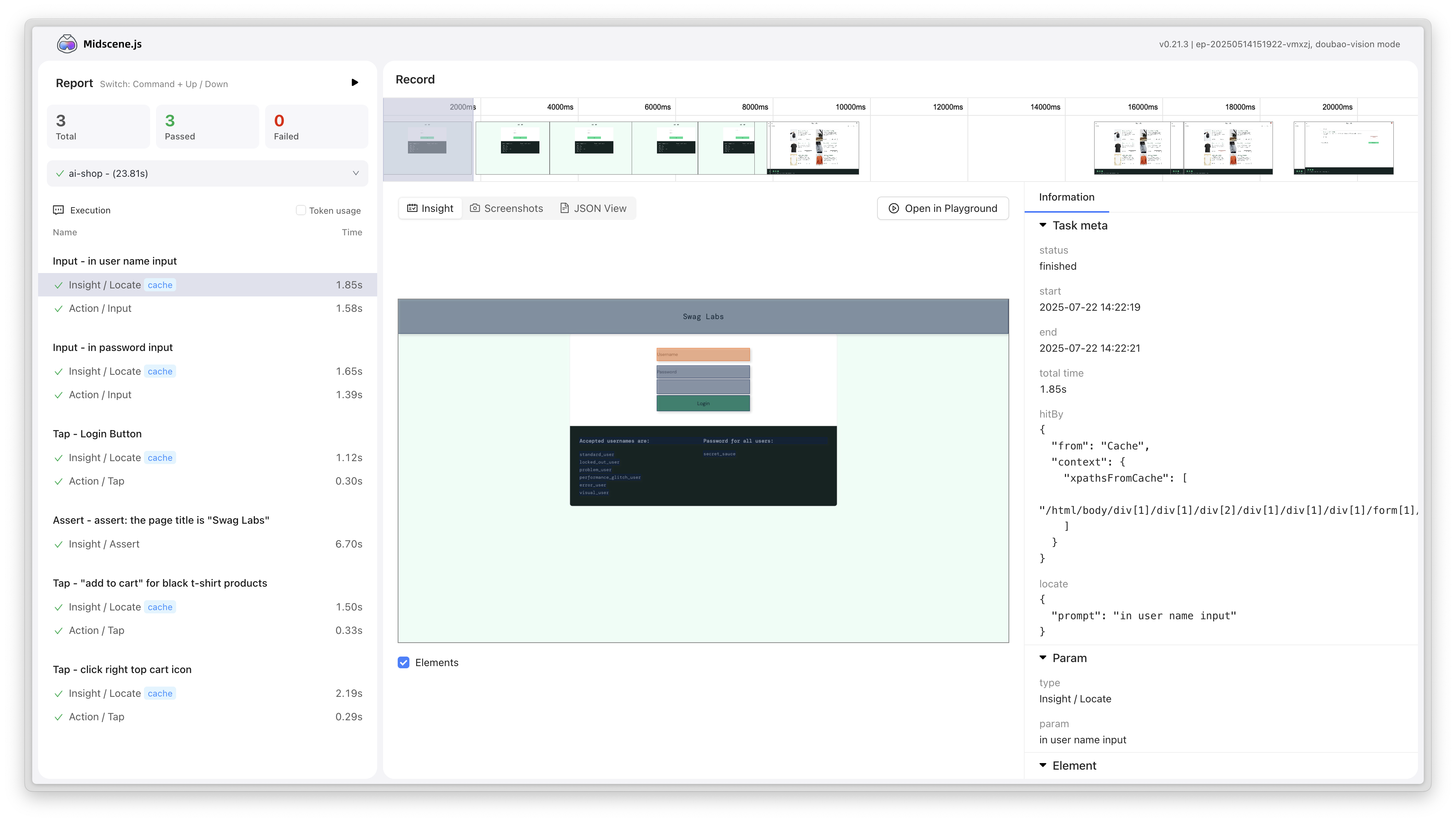

v0.23 - New report style and YAML script ability enhancement

Report system upgrade

New report style

- New report style design, providing clearer and more beautiful test result display

- Optimize report layout and visual effects, improve user reading experience

- Enhance report readability and information hierarchy structure

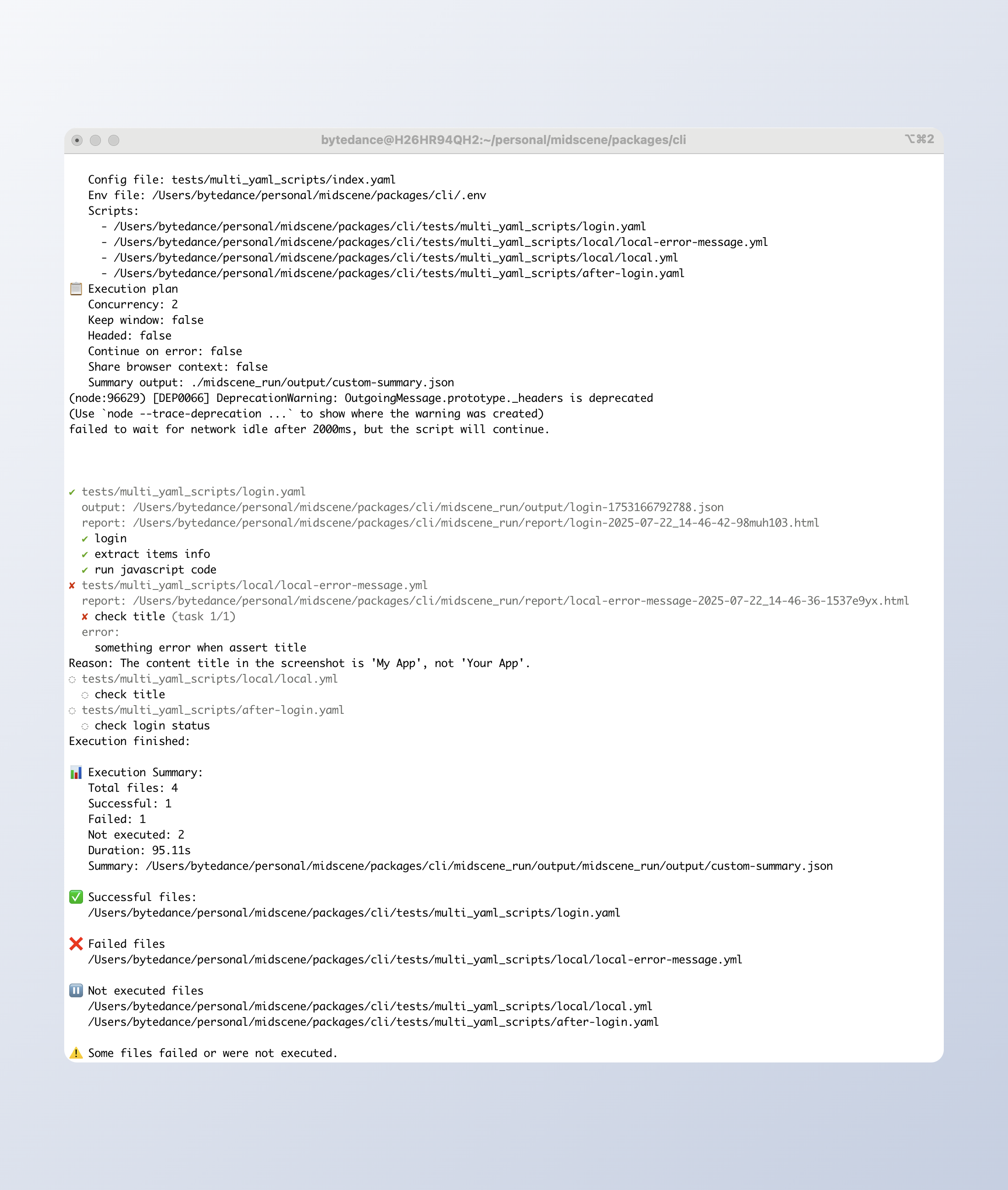

YAML script ability enhancement

Support multiple YAML files batch execution

- New config mode: configure YAML file running order, browser reuse strategy, and parallelism

- Support getting JSON format running results

Test coverage enhancement

Android test enhancement

- New Android platform related test cases, improve code quality and stability

- Improve test coverage, ensure the reliability of Android features

v0.22 - Chrome extension recording function released

Web integration enhancement

New recording function

- Chrome extension adds recording function, which can record user operations on the page and generate automation scripts

- Support recording click, input, scroll and other common operations, greatly reducing the threshold for writing automation scripts

- The recorded operations can be directly played back and debugged in the Playground

Upgrade to IndexedDB for storage

- Chrome extension's Playground and Bridge now use IndexedDB for data storage

- Compared to the previous storage scheme, it provides larger storage capacity and better performance

- Support storing more complex data structures, laying the foundation for future feature extensions

Customize replanning cycle limit

- Set the MIDSCENE_REPLANNING_CYCLE_LIMIT environment variable to customize the maximum number of re-planning cycles allowed when executing operations (aiAct).

- The default value is 10. When the AI needs to re-plan more times than this limit, an error is thrown that suggests splitting the task.

- Provide more flexible task execution control, adapting to different automation scenarios
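The limit can be pictured as a bound on a planning loop. The sketch below is hypothetical and is not Midscene's internal code: the variable name and the default of 10 come from the text above, while readCycleLimit, runWithReplanning, and the exact way cycles are counted are assumptions.

```typescript
// Hypothetical sketch of a bounded re-planning loop.
type EnvSource = Record<string, string | undefined>;

const DEFAULT_REPLANNING_CYCLE_LIMIT = 10; // default stated in the changelog

function readCycleLimit(env: EnvSource): number {
  const parsed = Number.parseInt(env["MIDSCENE_REPLANNING_CYCLE_LIMIT"] ?? "", 10);
  // Fall back to the default when the variable is missing or not a positive integer.
  return Number.isInteger(parsed) && parsed > 0
    ? parsed
    : DEFAULT_REPLANNING_CYCLE_LIMIT;
}

// `planStep` returns true once the task is complete.
function runWithReplanning(
  planStep: (cycle: number) => boolean,
  limit: number,
): number {
  for (let cycle = 1; cycle <= limit; cycle++) {
    if (planStep(cycle)) return cycle;
  }
  throw new Error(
    `Task not finished after ${limit} planning cycles; consider splitting the task.`,
  );
}

const customLimit = readCycleLimit({ MIDSCENE_REPLANNING_CYCLE_LIMIT: "3" });
const cyclesUsed = runWithReplanning((cycle) => cycle === 2, customLimit);
```

A task that never completes within the limit surfaces as an error instead of looping indefinitely, which is the behavior the environment variable tunes.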

Android interaction optimization

New screenshot path generation

- Generate a unique file path for each screenshot to avoid file overwrite issues

- Improve stability in concurrent test scenarios

v0.21 - Chrome extension UI upgrade

Web integration enhancement

New chat-style user interface

- New chat-style user interface design for better user experience

Flexible timeout configuration

- Supports overriding timeout settings from test fixture, providing more flexible timeout control

- Applicable scenarios: Different test cases require different timeout settings

Unified Puppeteer and Playwright configuration

- New waitForNavigationTimeout and waitForNetworkIdleTimeout parameters for Playwright

- Unified timeout options configuration for Puppeteer and Playwright, providing consistent API experience, reducing learning costs

New data export callback mechanism

- New agent.onDumpUpdate callback function, can get real-time notification when data is exported

- Refactored the post-task processing flow to ensure the correct execution of asynchronous operations

- Applicable scenarios: Monitoring or processing exported data

Android interaction optimization

Input experience improvement

- Changed click input to slide operation, improving interaction response and stability

- Reduced operation failures caused by inaccurate clicks

v0.20 - Support for assigning XPath to locate elements

Web integration enhancement

New aiAsk method

- Allows direct questioning of the AI model to obtain string-formatted answers for the current page.

- Applicable scenarios: Tasks requiring AI reasoning such as Q&A on page content and information extraction.

- Example:

Support for passing XPath to locate elements

- Location priority: Specified XPath > Cache > AI model location.

- Applicable scenarios: When the XPath of an element is known and the AI model location needs to be skipped.

- Example:
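The original snippet is not reproduced here. As a sketch of the priority order stated above (specified XPath, then cache, then AI model location), the following hypothetical resolver shows which source would be chosen; the type and function names are illustrative and not part of Midscene's API.

```typescript
// Hypothetical resolver illustrating the priority: XPath > Cache > AI model.
type LocateSource = "xpath" | "cache" | "ai";

interface LocateRequest {
  prompt: string;
  xpath?: string; // explicitly supplied by the caller
}

function chooseLocateSource(
  request: LocateRequest,
  cache: Map<string, string>,
): LocateSource {
  // 1. A caller-specified XPath skips everything else.
  if (request.xpath !== undefined && request.xpath !== "") return "xpath";
  // 2. Otherwise reuse a cached location for the same prompt.
  if (cache.has(request.prompt)) return "cache";
  // 3. Only then ask the AI model to locate the element.
  return "ai";
}

const locateCache = new Map<string, string>([["submit button", "//form/button[1]"]]);

const withXpath = chooseLocateSource(
  { prompt: "submit button", xpath: "//*[@id='submit']" },
  locateCache,
);
const fromCache = chooseLocateSource({ prompt: "submit button" }, locateCache);
const fromModel = chooseLocateSource({ prompt: "search box" }, locateCache);
```

Even though "submit button" is cached, the explicit XPath wins in the first call, which matches the stated priority.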

Android improvement

Playground tasks can be cancelled

- Supports interrupting ongoing automation tasks to improve debugging efficiency.

Enhanced aiLocate API

- Returns the Device Pixel Ratio, which is commonly used to calculate the real coordinates of elements.

Report generation optimization

Improve report generation mechanism, from batch storage to single append, effectively reducing memory usage and avoiding memory overflow when the number of test cases is large.

v0.19 - Support for getting complete execution process data

New API for getting Midscene execution process data

Add the _unstableLogContent API to the agent. Get the execution process data of Midscene, including the time of each step, the AI tokens consumed, and the screenshot.

The report is generated based on this data, which means you can customize your own report using this data.

Read more: API documentation

CLI support for adjusting Midscene env variable priority

By default, values in the .env file do not override existing global environment variables. If you want the .env file to take priority, use the --dotenv-override option.
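The precedence rule can be illustrated with a small merge function. This is a hypothetical sketch that mimics the behavior only; it does not call dotenv or the Midscene CLI, and the mergeEnv name and sample values are made up for illustration.

```typescript
// Hypothetical merge helper: models the precedence between global env and .env values.
type EnvMap = Record<string, string>;

function mergeEnv(globalEnv: EnvMap, dotenvFile: EnvMap, override: boolean): EnvMap {
  // Later spreads win: with override, .env values replace global ones;
  // without it, global values are kept and .env only fills in the gaps.
  return override
    ? { ...globalEnv, ...dotenvFile }
    : { ...dotenvFile, ...globalEnv };
}

const shellEnv: EnvMap = { OPENAI_API_KEY: "from-shell" };
const dotenvValues: EnvMap = {
  OPENAI_API_KEY: "from-dotenv",
  OPENAI_BASE_URL: "https://example.invalid/v1", // placeholder URL
};

// Default behavior: the shell value is kept.
const defaultResult = mergeEnv(shellEnv, dotenvValues, false);
// With the override flag: the .env value wins.
const overrideResult = mergeEnv(shellEnv, dotenvValues, true);
```

In both cases variables that exist only in the .env file are still applied; the flag matters only when the same name is defined in both places.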

Read more: Use YAML-based Automation Scripts

Reduce report file size

Reduce the size of the generated report by trimming redundant data, significantly reducing the report file size for complex pages. The typical report file size for complex pages has been reduced from 47.6M to 15.6M!

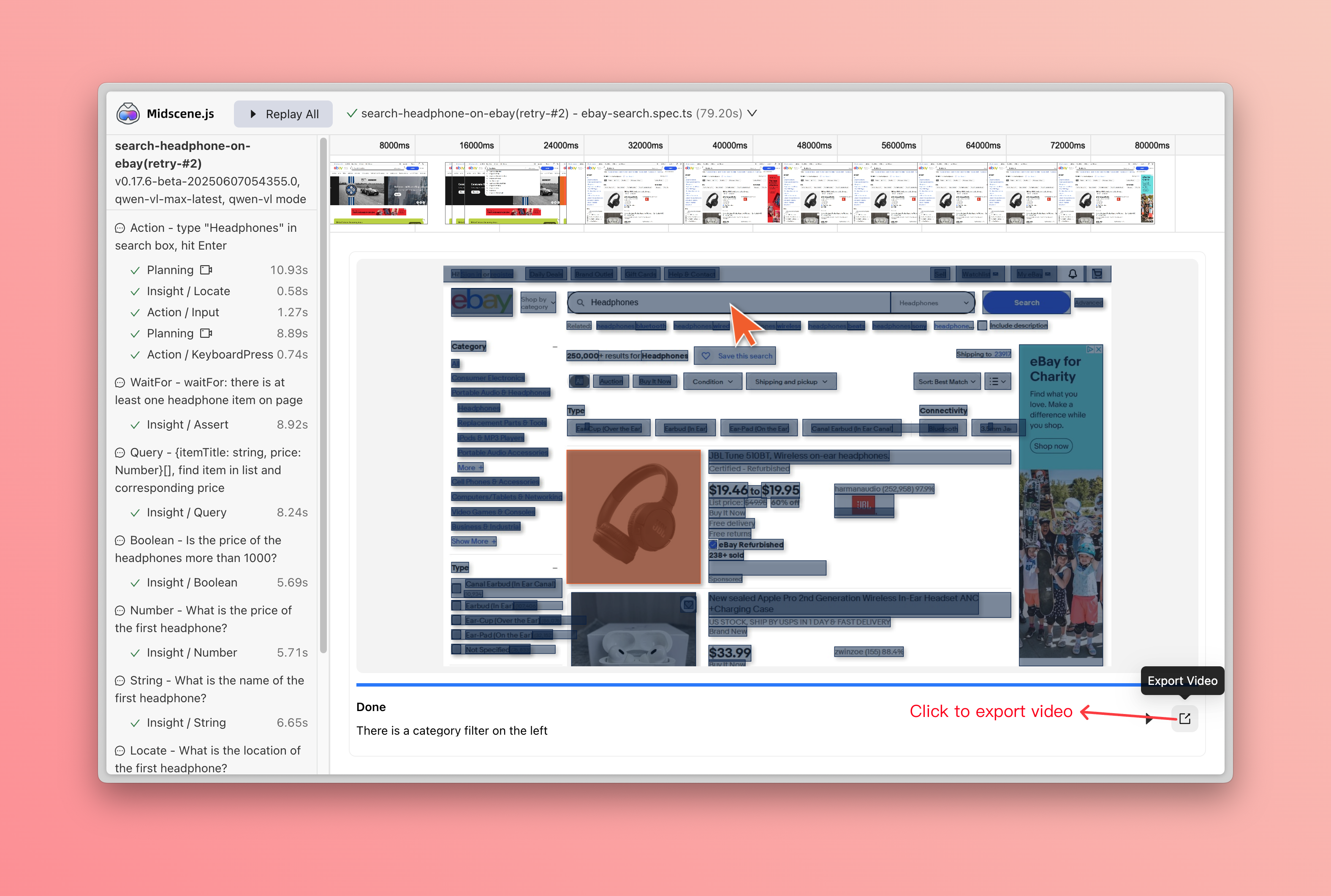

v0.18 - Enhanced reporting features

🚀 Midscene has another update! It makes your testing and automation processes even more powerful:

Custom node in report

- Add the recordToReport API to the agent. Take a screenshot of the current page as a report node, and support setting the node title and description to make the automated testing process more intuitive. Applicable for capturing screenshots of key steps, error status capture, UI validation, etc.

- Example:
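A minimal sketch of recording key steps and error states. The `ReportRecorder` interface is a structural stand-in for the agent, and the `(title, { content })` signature is an assumption based on the title and description mentioned above; confirm it against the API documentation. `runStepWithRecord` is a hypothetical helper.

```typescript
// Structural type for the part of the agent used here.
// The (title, { content }) signature is an assumption.
interface ReportRecorder {
  recordToReport(title: string, options?: { content?: string }): Promise<unknown>;
}

// Run a step, then add a screenshot node to the report.
// If the step fails, record the error state before rethrowing.
async function runStepWithRecord(
  agent: ReportRecorder,
  stepName: string,
  step: () => Promise<void>,
): Promise<void> {
  try {
    await step();
  } catch (error) {
    await agent.recordToReport(`${stepName} (failed)`, {
      content: String(error),
    });
    throw error;
  }
  await agent.recordToReport(stepName, {
    content: `Step "${stepName}" completed`,
  });
}
```

A real agent instance can be passed in place of `ReportRecorder` as long as it exposes a compatible recordToReport method.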

Support for downloading reports as videos

- Support direct video download from the report player, just by clicking the download button on the player interface.

- Applicable scenarios: Share test results, archive reproduction steps, and demonstrate problem reproduction.

More Android configurations exposed

- Optimize input interactions in Android apps and allow connecting to remote Android devices

  - autoDismissKeyboard?: boolean - Optional parameter. Whether to automatically dismiss the keyboard after entering text. The default value is true.

  - androidAdbPath?: string - Optional parameter. Used to specify the path of the adb executable file.

  - remoteAdbHost?: string - Optional parameter. Used to specify the remote adb host.

  - remoteAdbPort?: number - Optional parameter. Used to specify the remote adb port.

Examples:
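One way to picture what these options control is to map them onto an adb invocation. The sketch below is an illustration only and says nothing about how Midscene invokes adb internally: the option names come from the list above, while `AndroidConnectionOptions` and `buildAdbCommand` are hypothetical. It relies on adb's global -H and -P flags, which select the adb server host and port.

```typescript
// Option names mirror the changelog; the type name itself is hypothetical.
interface AndroidConnectionOptions {
  autoDismissKeyboard?: boolean;
  androidAdbPath?: string;
  remoteAdbHost?: string;
  remoteAdbPort?: number;
}

// Build the adb executable plus global arguments implied by the options,
// followed by the adb subcommand to run.
function buildAdbCommand(
  options: AndroidConnectionOptions,
  args: string[],
): string[] {
  const command: string[] = [options.androidAdbPath ?? "adb"];
  if (options.remoteAdbHost) {
    command.push("-H", options.remoteAdbHost);
  }
  if (options.remoteAdbPort !== undefined) {
    command.push("-P", String(options.remoteAdbPort));
  }
  return [...command, ...args];
}

const remote: AndroidConnectionOptions = {
  autoDismissKeyboard: false, // keep the keyboard open after typing
  remoteAdbHost: "192.168.1.20", // example address, replace with your host
  remoteAdbPort: 5037,
};
console.log(buildAdbCommand(remote, ["devices"]).join(" "));
// adb -H 192.168.1.20 -P 5037 devices
```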

Upgrade now to experience these powerful new features!

v0.17 - Let AI see the DOM of the page

Data query API enhanced

To support more automation and data extraction scenarios, the following APIs now accept an options parameter that controls whether DOM information and screenshots are passed to the AI model:

- agent.aiQuery(dataDemand, options)

- agent.aiBoolean(prompt, options)

- agent.aiNumber(prompt, options)

- agent.aiString(prompt, options)

New options parameter

- domIncluded: Whether to pass the simplified DOM information to AI model, default is off. This is useful for extracting attributes that are not visible on the page, like image links.

- screenshotIncluded: Whether to pass the screenshot to AI model, default is on.

Code example
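A minimal sketch of passing the new options. `QueryAgent` is a structural stand-in covering only the call used here, `Contact` and `extractContacts` are hypothetical, and the generic return type is an assumption; the option names come from the list above.

```typescript
// Options described above: domIncluded defaults to off, screenshotIncluded to on.
interface QueryOptions {
  domIncluded?: boolean;
  screenshotIncluded?: boolean;
}

// Structural type covering only the call used in this sketch (an assumption).
interface QueryAgent {
  aiQuery<T>(dataDemand: string, options?: QueryOptions): Promise<T>;
}

// Hypothetical data shape for the example.
interface Contact {
  name: string;
  avatarUrl: string;
}

// Image links are not visible in a screenshot, so the simplified DOM
// is passed to the model as well.
async function extractContacts(agent: QueryAgent): Promise<Contact[]> {
  return agent.aiQuery<Contact[]>(
    "{name: string, avatarUrl: string}[], every contact in the list with its avatar image link",
    { domIncluded: true },
  );
}
```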

New right-click ability

Have you ever encountered a scenario where you need to automate a right-click operation? Now, Midscene supports a new agent.aiRightClick() method!

Function

Perform a right-click operation on the specified element, suitable for scenarios where right-click events are customized on web pages. Please note that Midscene cannot interact with the browser's native context menu after right-click.

Parameter description

- locate: Describe the element you want to operate in natural language

- options: Optional, supports deepThink (AI fine-grained positioning) and cacheable (result caching)

Example
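A minimal sketch of a right-click followed by a tap on a page-rendered menu. `ContextMenuAgent` is a structural stand-in for the agent and `deleteViaContextMenu` is a hypothetical helper; the return types and the menu wording are assumptions, while the parameters follow the description above.

```typescript
interface RightClickOptions {
  deepThink?: boolean; // AI fine-grained positioning
  cacheable?: boolean; // result caching
}

// Structural type covering only the calls used in this sketch (an assumption).
interface ContextMenuAgent {
  aiRightClick(locate: string, options?: RightClickOptions): Promise<unknown>;
  aiTap(locate: string): Promise<unknown>;
}

// Open a custom context menu on an item and pick its "Delete" entry.
// This only works for menus rendered by the page itself; the browser's
// native context menu cannot be operated on.
async function deleteViaContextMenu(
  agent: ContextMenuAgent,
  itemDescription: string,
): Promise<void> {
  await agent.aiRightClick(itemDescription, { deepThink: true });
  await agent.aiTap('the "Delete" entry in the context menu');
}
```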

A complete example

In this report file, we show a complete example of using the new aiRightClick API and new query parameters to extract contact data including hidden attributes.

Report file: puppeteer-2025-06-04_20-34-48-zyh4ry4e.html

The corresponding code can be found in our example repository: puppeteer-demo/extract-data.ts

Refactor cache

The cache now stores XPaths instead of coordinates, which improves the cache hit rate.

The cache file format has changed from JSON to YAML, which improves readability.

v0.16 - Support MCP

Midscene MCP

- 🤖 Use Cursor / Trae to help write test cases.

- 🕹️ Quickly implement browser operations akin to the Manus platform.

- 🔧 Integrate Midscene capabilities swiftly into your platforms and tools.

Read more: MCP

Support structured API for agent

APIs: aiBoolean, aiNumber, aiString, aiLocate

Read more: Use JavaScript to Optimize the AI Automation Code

v0.15 - Android automation unlocked!

Android automation unlocked!

- 🤖 AI Playground: natural‑language debugging

- 📱 Supports native, Lynx & WebView apps

- 🔁 Replayable runs

- 🛠️ YAML or JS SDK

- ⚡ Auto‑planning & Instant Actions APIs

Read more: Android automation

More features

- Allow custom midscene_run dir

- Enhance report filename generation with unique identifiers and support split mode

- Enhance timeout configurations and logging for network idle and navigation

- Adapt for gemini-2.5-pro

v0.14 - Instant actions

"Instant Actions" introduces new atomic APIs, enhancing the accuracy of AI operations.

Read more: Instant Actions

v0.13 - DeepThink mode

Atomic AI interaction methods

- Supports aiTap, aiInput, aiHover, aiScroll, and aiKeyboardPress for precise AI actions.

DeepThink mode

- Enhances click accuracy with deeper contextual understanding.

v0.12 - Integrate Qwen 2.5 VL

Integrate Qwen 2.5 VL's native capabilities

- Keeps output accuracy.

- Supports more element interactions.

- Cuts operating cost by over 80%.

v0.11.0 - UI-TARS model caching

UI-TARS model supports caching

- To enable caching, see the documentation 👉: Enable Caching

- Effect after enabling caching

Optimize DOM tree extraction strategy

- Optimize the information carried by the DOM tree, accelerating the inference process of models like GPT-4o

v0.10.0 - UI-TARS model released

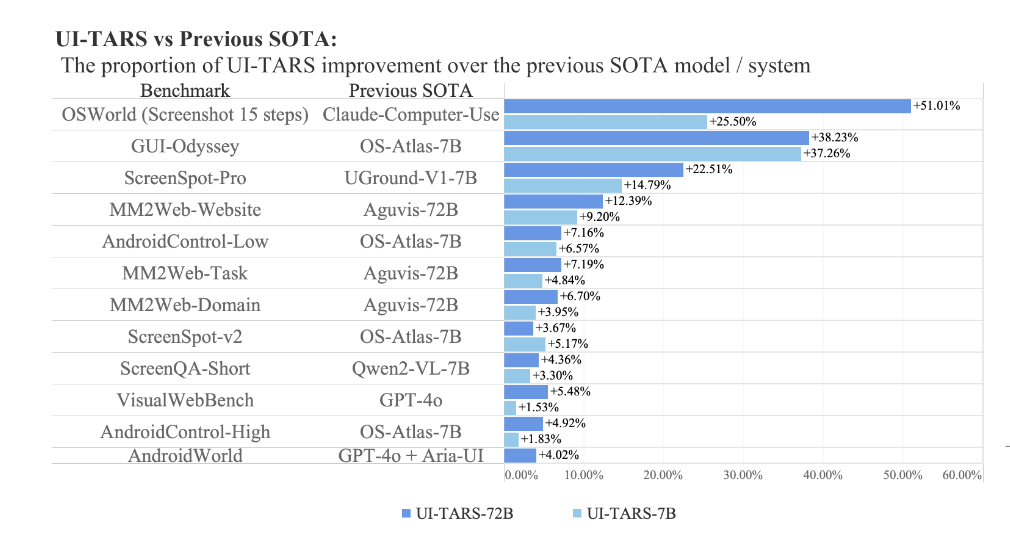

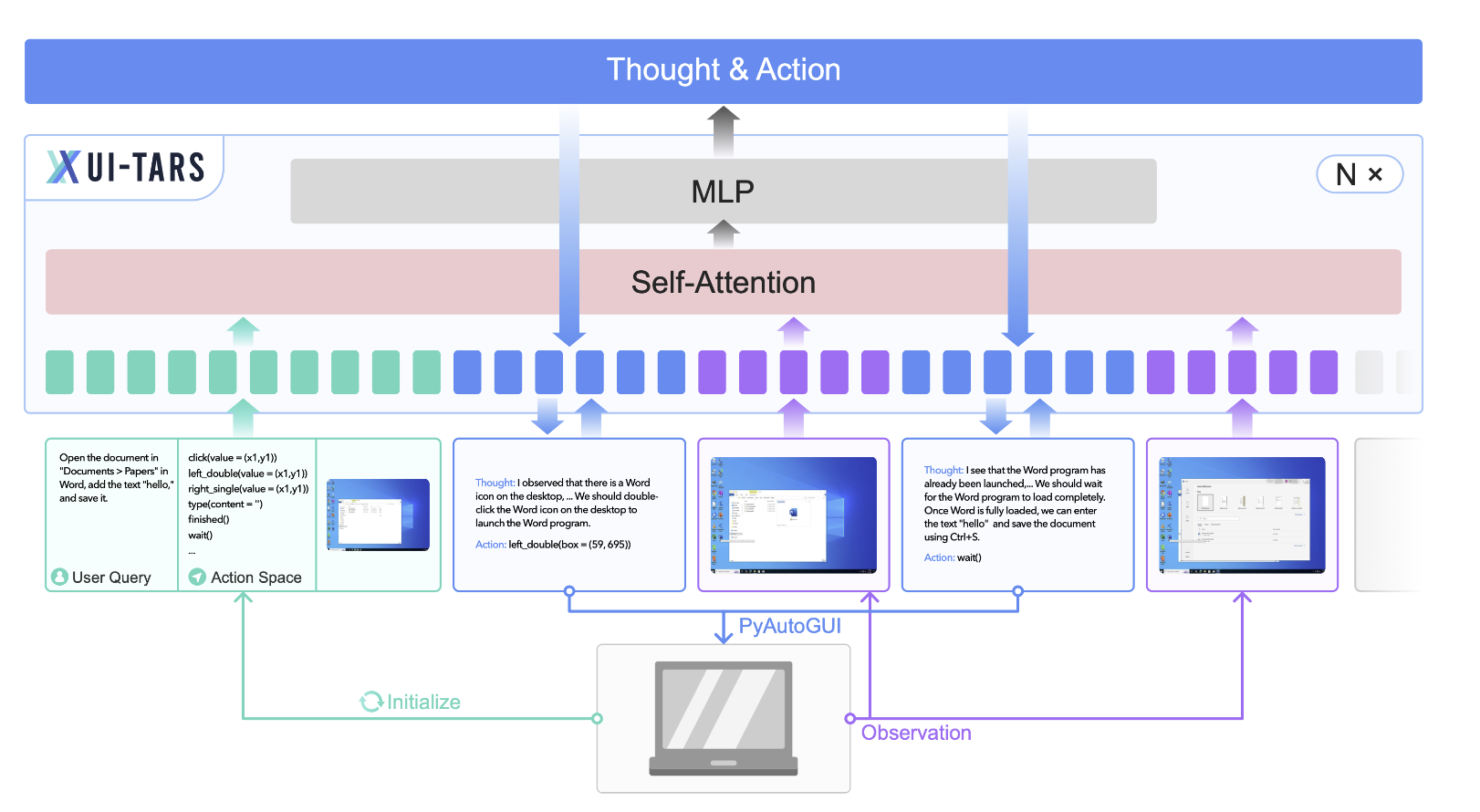

UI-TARS is a native GUI agent model released by the Seed team. It is named after the TARS robot in the movie Interstellar, which has high intelligence and autonomous thinking capabilities. UI-TARS takes images and human instructions as input, perceives the correct next action, and gradually approaches the goal of the instructions, achieving the best performance in various GUI automation benchmarks compared with both open-source and closed-source commercial models.

UI-TARS: Pioneering Automated GUI Interaction with Native Agents - Figure 1

UI-TARS: Pioneering Automated GUI Interaction with Native Agents - Figure 4

Model advantage

UI-TARS has the following advantages in GUI tasks:

- Target-driven

- Fast inference speed

- Native GUI agent model

- Private deployment without data security issues

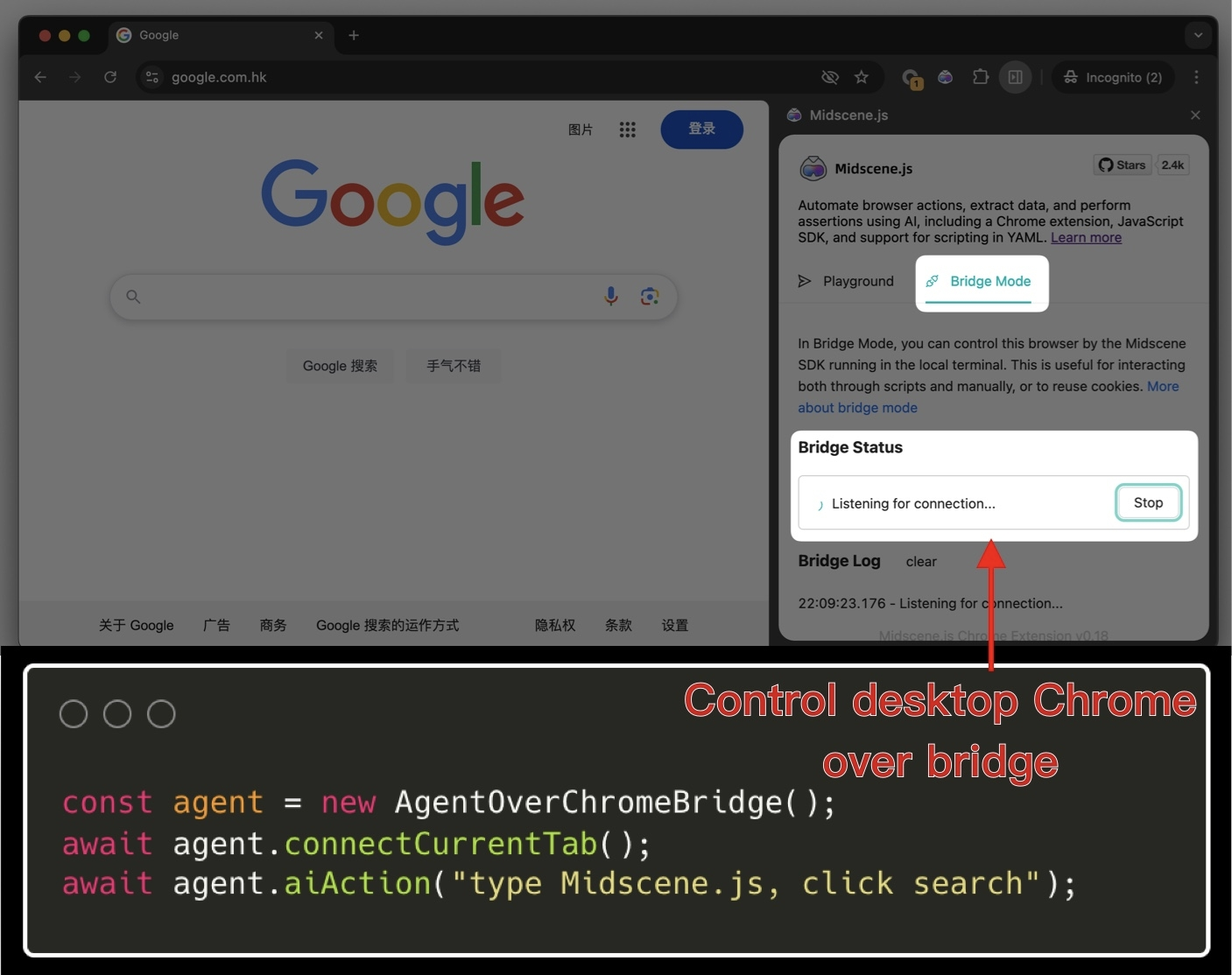

v0.9.0 - Bridge mode released

With the Midscene browser extension, you can now use scripts to link with the desktop browser for automated operations!

We call it "Bridge Mode".

Compared with the previous approach of debugging in a CI environment, the advantages are:

- You can reuse the desktop browser, especially its cookies, login state, and front-end interface state, and start automation without worrying about environment setup.

- Manual operation and scripts can work together, which improves the flexibility of automation tools.

- For simple business regression testing, just run it locally with Bridge Mode.

Documentation: Use Chrome Extension to Experience Midscene

v0.8.0 - Chrome extension

New Chrome extension, run Midscene anywhere

Through the Midscene browser extension, you can run Midscene on any page, without writing any code.

Experience it now 👉: Use Chrome Extension to Experience Midscene

v0.7.0 - Playground ability

Playground ability, debug anytime

Now you don't have to keep re-running scripts to debug prompts!

On the new test report page, you can debug the AI execution results at any time, including page operations, page information extraction, and page assertions.

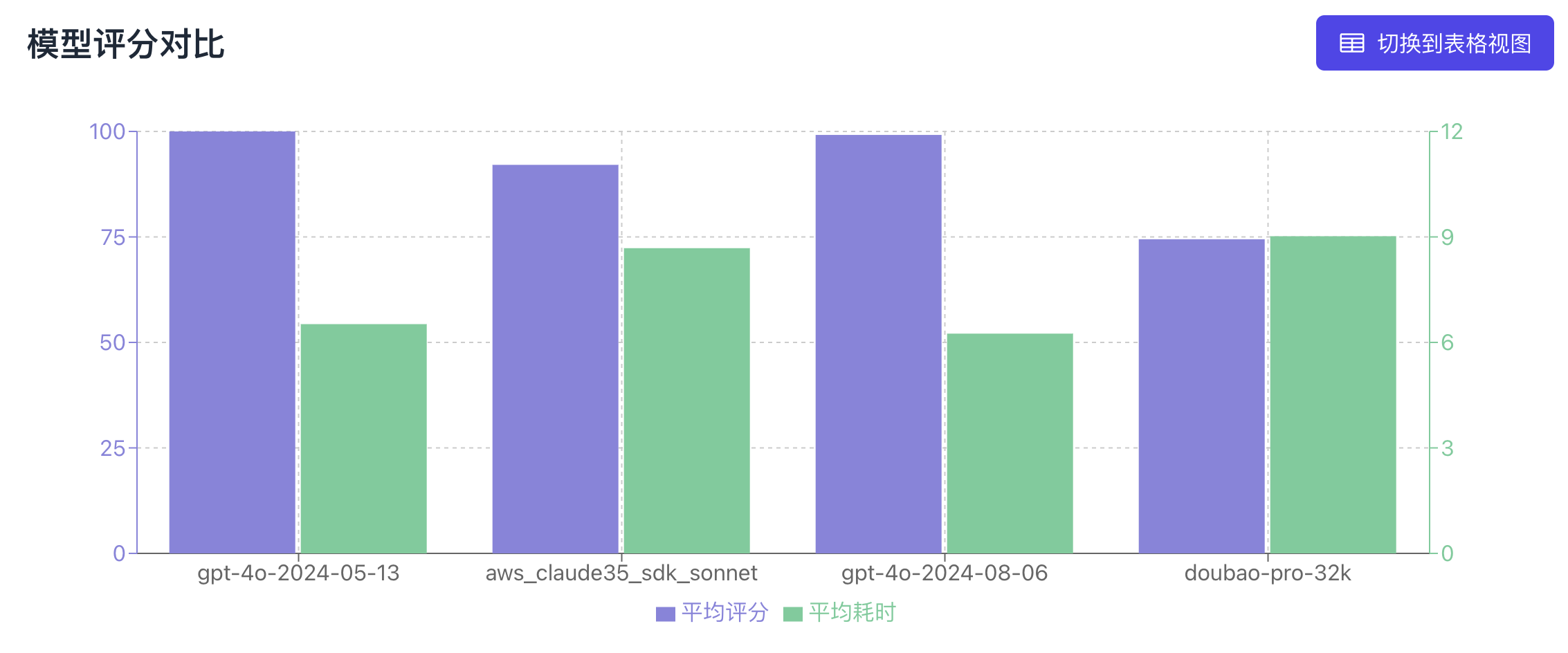

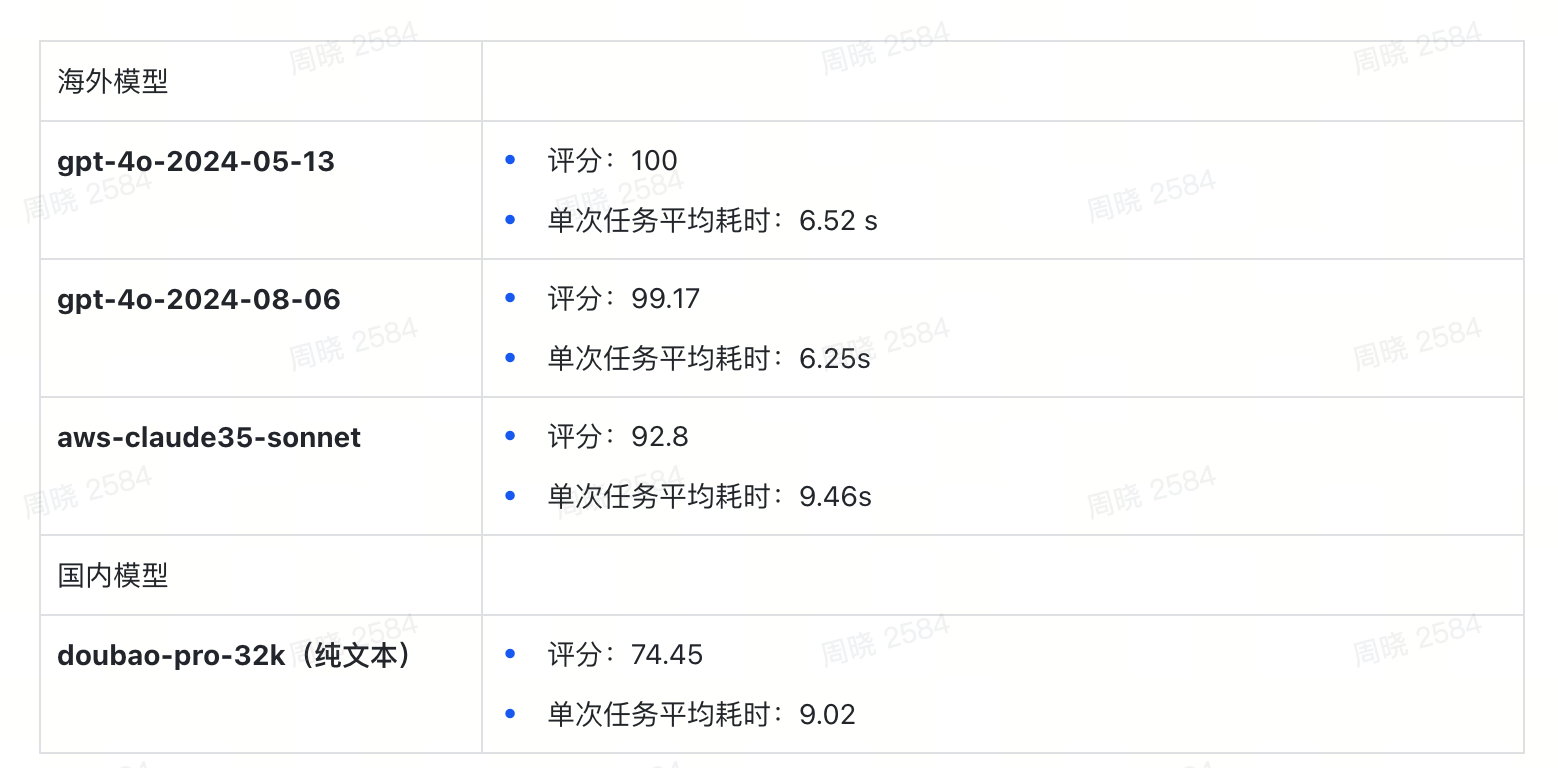

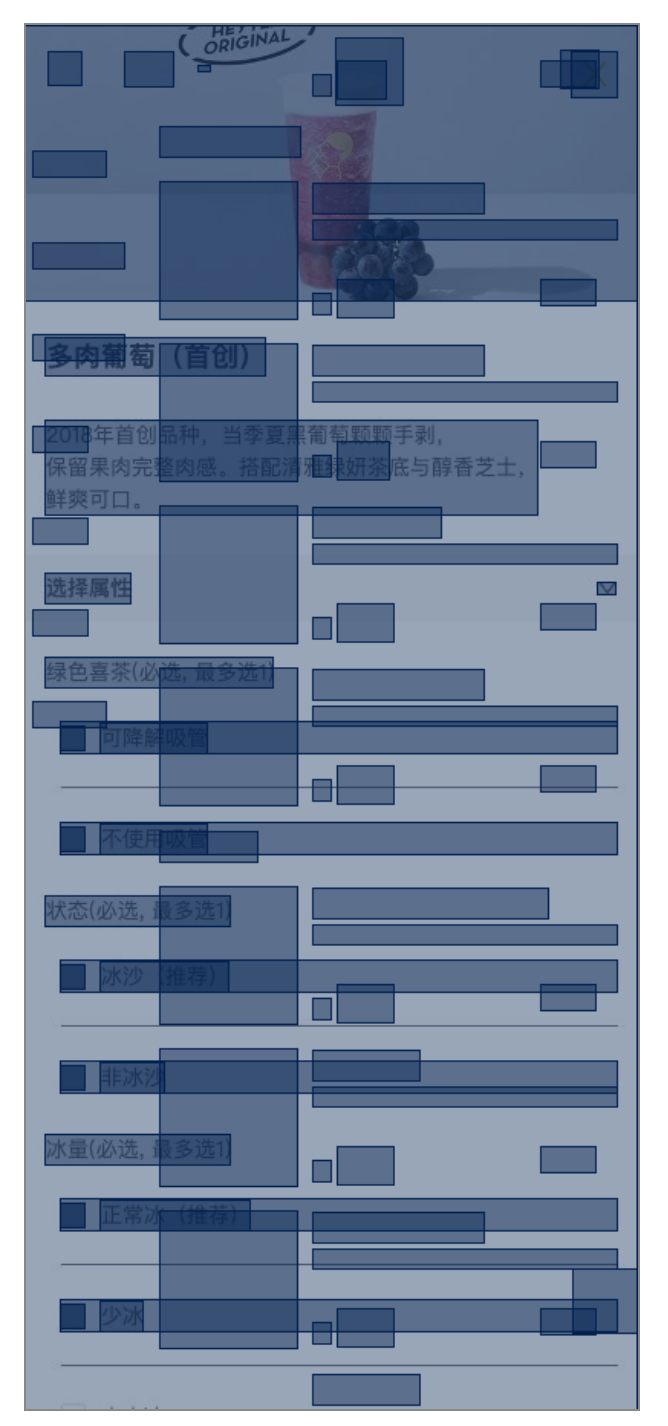

v0.6.0 - Doubao model support

Doubao model support

- Support for calling Doubao models. Refer to the environment variables below to try it out.

Summary of Doubao model availability:

- Currently, Doubao only has pure text models, which means "seeing" is not available. In scenarios where pure text is used for reasoning, it performs well.

- If the use case requires combining UI analysis, it is completely unusable.

Example:

✅ The price of a multi-meat grape (can be guessed from the order of the text on the interface)

✅ The language switch text button (can be guessed from the text content on the interface: Chinese, English text)

❌ The left-bottom play button (requires image understanding, failed)

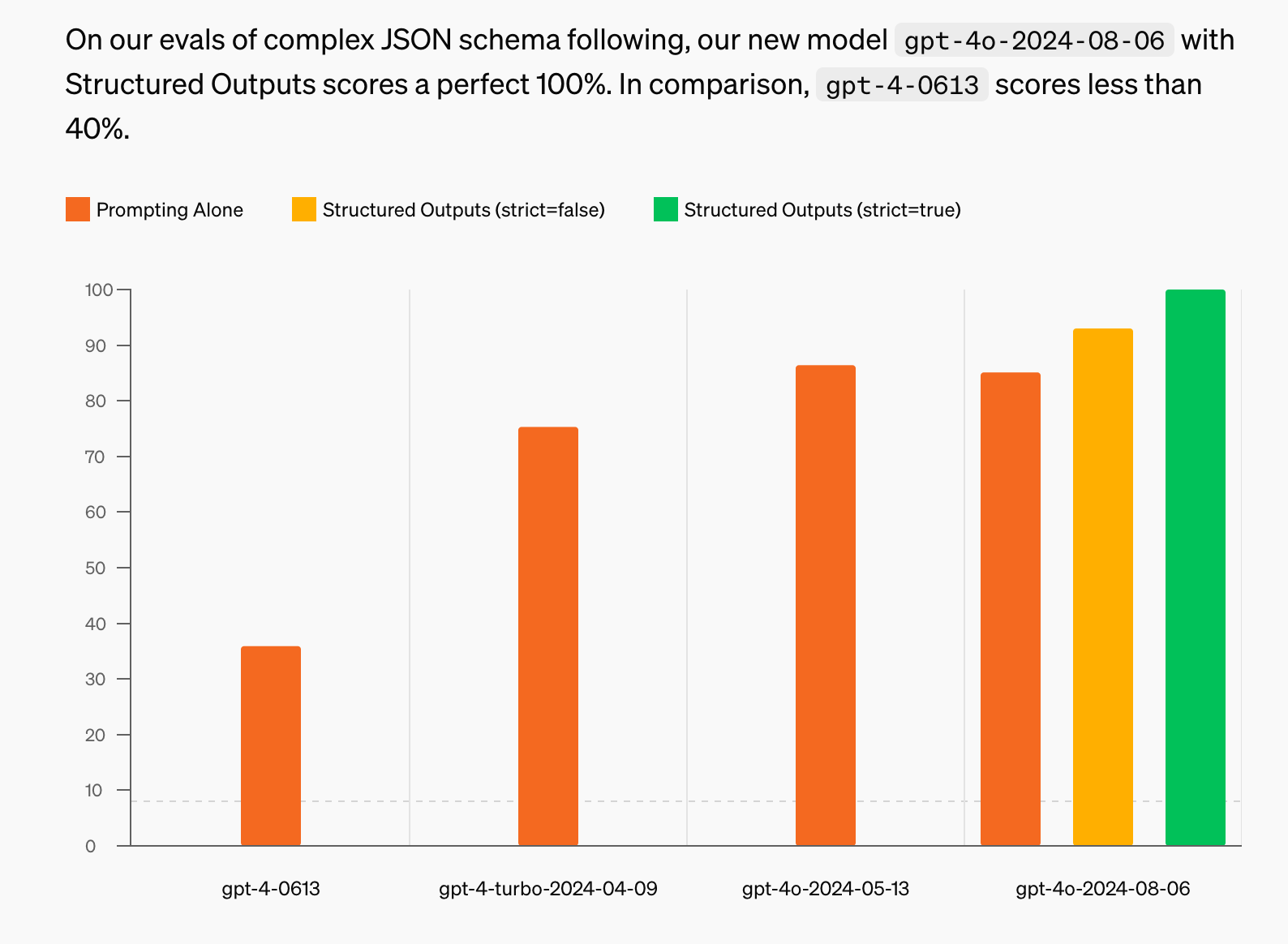

Support for GPT-4o structured output, cost reduction

By using the gpt-4o-2024-08-06 model, Midscene now supports structured output, which improves stability and reduces costs by more than 40%.

Midscene can now also hit GPT-4o prompt caching, and the cost of AI calls will continue to decrease as the company's GPT platform is deployed.

Test report: support animation playback

Now you can view the animation playback of each step in the test report to quickly debug your running script.

Speed up: merge plan and locate operations, response speed increased by 30%

In the new version, we have merged the Plan and Locate operations in the prompt execution to a certain extent, which increases the response speed of AI by 30%.

Before

after

Test report: the accuracy of different models

- GPT 4o series models, 100% correct rate

- doubao-pro-4k pure text model, approaching usable state

Problem fix

- Optimize the page information extraction to avoid collecting obscured elements, improving success rate, speed, and AI call cost 🚀

before

after

v0.5.0 - Support GPT-4o structured output

New features

- Support for the gpt-4o-2024-08-06 model, which constrains output to valid JSON 100% of the time and reduces hallucinations in Midscene task planning

- Support for real-time visualization of AI behavior in Playwright, improving troubleshooting efficiency

- Cache generalization: caching is no longer limited to Playwright; pagepass and Puppeteer can also use the cache

- Support for Azure OpenAI

- Support for AI to add to, delete, and modify existing input

Problem fix

- Optimize the page information extraction to avoid collecting obscured elements, improving success rate, speed, and AI call cost 🚀

- During the AI interaction process, unnecessary attribute fields were trimmed, reducing token consumption.

- Optimize the AI interaction process to reduce the likelihood of hallucination in KeyboardPress and Input events

- For pagepass, provide an optimization solution for the flickering behavior that occurs during the execution of Midscene

v0.4.0 - Support CLI usage

New features

- Support for CLI usage, lowering the barrier to using Midscene

- Support for AI to wait for a certain time before continuing with subsequent tasks

- Playwright AI task report shows the overall time and aggregates AI tasks by test group

Problem fix

- Optimize the AI interaction process to reduce the likelihood of hallucination in KeyboardPress and Input events

v0.3.0 - Support AI report HTML

New features

- Generate AI reports in HTML format, aggregating AI tasks by test group to make test reports easier to distribute

Problem fix

- Fix the problem of AI report scrolling preview

v0.2.0 - Control Puppeteer by natural language

New features

- Support for using natural language to control puppeteer to implement page automation 🗣️💻

- Provide AI cache capabilities for playwright framework, improve stability and execution efficiency

- AI report visualization, aggregate AI tasks by test group, facilitate test report distribution

- Support for AI to assert the page, let AI judge whether the page meets certain conditions

v0.1.0 - Control Playwright by natural language

New features

- Support for using natural language to control Playwright to implement page automation 🗣️💻

- Support for using natural language to extract page information 🔍🗂️

- AI report visualization, AI behavior, AI thinking visualization 🛠️👀

- Direct use of GPT-4o model, no training required 🤖🔧